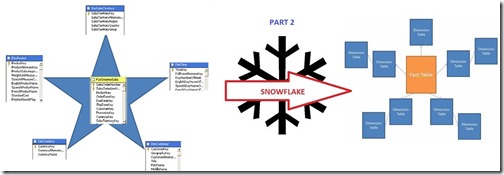

When and How to Snowflake Dimension Sources : SSAS Design Part 2

Reposted from Jason Thomas’s blog with the author’s permission.

In response to my previous post, one of my readers asked me this question: “Why are you hell-bent on making a star schema? You yourself have said that a snowflake schema gives the best performance during cube processing, and that once the cube is processed it doesn’t matter which schema was used, since the query performance would be the same. So make the designer’s life easier by putting all the dimension attributes in 3NF; obviously nothing can go wrong there!”

Well, I have been extremely lucky to work alongside a highly technical support and maintenance team at the client site, who challenge me and make me give appropriate reasons for every design decision I take. We as a vendor develop our applications and pass them on to the support team for the final code review and acceptance (this is in addition to the UAT or data testing, which is done along with the business users). This made me think of them as two separate customers with two different priorities for the same application. If I have to sell my application to them, I need to appease both, and the only way I can do that is through a fine balancing act.

The business users want the best performing system (in terms of both cube processing and query performance), while the support team wants a system that is easier to support and maintain. If I designed all my attributes in 3NF, the support team would reject the application straightaway, as they would not be able to make sense of what is happening in the DSV with its hundreds of attributes and relations. Since my cubes are usually smaller than 50GB, the difference in processing time between the two schemas is less than 15 minutes, which my business users would not even notice. In this case, it makes sense to use a star schema and appease my other customer, the support team. Your support team will love you for the additional work you do in the DSV’s diagram organizer when you neatly show them the star schemas for every fact.

In my last post, I quickly summarized some of the best practices that I apply in the design of my dimension sources, and explained the first scenario in which I would snowflake the dimension sources for the DSV. The three scenarios, as well as the example product dimension, are repeated below for quick reference.

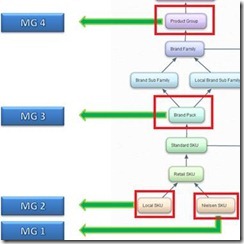

Scenario 2 – Multiple facts at multiple granularity

Consider the example in the figure above, where facts come in at multiple levels of the same dimension (at the Prod Group, Brand Pack, Local SKU and Nielsen SKU levels). In this scenario, all the members of the levels at which facts come in are needed, and hence the following views are made.

a) Individual views for each level at which facts come in: for each such level, a view is made that contains all the attributes / entities up to the next entity where one of the three scenarios applies. Since facts come in at 4 levels in this example, the following views are made.

    -- View for Local SKU and attributes
    SELECT
        Local_SKU,
        RSKU#FK      -- Scenario 3 at Retail SKU
    FROM
        <table>

    -- View for Nielsen SKU and attributes
    SELECT
        Nielsen_SKU,
        RSKU#FK      -- Scenario 3 at Retail SKU
    FROM
        <table>

    -- View for Brand Pack attributes
    SELECT
        BrandPack, BrandSF, LocalBrandSF, BrandFamily, RPCT, RPCTGrp,
        PG#FK        -- Scenario 2 at Product Group
    FROM
        <table>

    -- View for Product Group attributes
    SELECT
        ProductGroup, ReportingProductGroup
    FROM
        <table>
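To make the idea of facts arriving at two grains of the same dimension concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for the relational source behind the DSV. Every table name, column name, key and data value in it is hypothetical and chosen only for illustration; it is not the original data model. It shows a Brand Pack level fact being rolled up to Product Group through the foreign key kept in the Brand Pack view, and compared with a fact that arrives directly at Product Group level.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical source tables (all names and values are illustrative only).
CREATE TABLE product_group (
    pg_id INTEGER PRIMARY KEY,
    product_group TEXT,
    reporting_product_group TEXT
);
CREATE TABLE brand_pack (
    bp_id INTEGER PRIMARY KEY,
    brand_pack TEXT,
    brand_sf TEXT,
    brand_family TEXT,
    pg_fk INTEGER REFERENCES product_group(pg_id)
);
INSERT INTO product_group VALUES (1, 'Cola', 'Soft Drinks'),
                                 (2, 'Juice', 'Soft Drinks');
INSERT INTO brand_pack VALUES (10, 'Cola 330ml Can', 'Cola Classic', 'Cola', 1),
                              (11, 'Cola 1L Bottle', 'Cola Classic', 'Cola', 1),
                              (12, 'Orange 1L Carton', 'Orange', 'Juice', 2);

-- One view per level at which facts arrive.
CREATE VIEW vw_product_group AS
    SELECT pg_id, product_group, reporting_product_group FROM product_group;
CREATE VIEW vw_brand_pack AS
    SELECT bp_id, brand_pack, brand_sf, brand_family, pg_fk FROM brand_pack;

-- Two facts at two different grains of the same dimension.
CREATE TABLE fact_sales  (bp_fk INTEGER, sales REAL);   -- Brand Pack grain
CREATE TABLE fact_budget (pg_fk INTEGER, budget REAL);  -- Product Group grain
INSERT INTO fact_sales  VALUES (10, 100), (11, 50), (12, 70);
INSERT INTO fact_budget VALUES (1, 200), (2, 60);
""")


def sales_vs_budget(connection):
    """Roll Brand Pack sales up to Product Group and pair them with budget."""
    return connection.execute("""
        SELECT pg.product_group, s.total_sales, b.budget
        FROM vw_product_group AS pg
        JOIN (SELECT bp.pg_fk, SUM(f.sales) AS total_sales
              FROM fact_sales AS f
              JOIN vw_brand_pack AS bp ON bp.bp_id = f.bp_fk
              GROUP BY bp.pg_fk) AS s ON s.pg_fk = pg.pg_id
        JOIN fact_budget AS b ON b.pg_fk = pg.pg_id
        ORDER BY pg.product_group
    """).fetchall()


result = sales_vs_budget(conn)
print(result)
```

Because the Brand Pack view stops at the Product Group key instead of repeating the Product Group attributes, both facts end up sharing the same Product Group members.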

Some things to note here:

- If the entity for which the view is made is the lowest level of the dimension, or if the entity’s immediate child has a mandatory relationship with it, there is no need to include the Unknown Member. Otherwise, we have to provide the Unknown Member for that entity. (A mandatory relationship means that for every occurrence of entity A there must exist an entity B, and vice versa.)

- Scenario 1 and Scenario 2 overlap for the Local SKU and Nielsen SKU views, but the resulting views are the same. They are repeated in this post only for completeness of Scenario 2.

- In the view for Brand Pack attributes, it might look like Scenario 3 applies at the Brand Family level. But Scenario 3 needs an extra condition to be satisfied, which is not met here. This will be explained in detail in the next post.

- The general rule for each individual view in Scenario 1 and Scenario 2 is to select all the attribute members that have children at the lowest level of that view. For example, the view for Brand Pack attributes contains every Brand Sub Family that has Brand Packs. A Brand Sub Family that has no Brand Pack will not be present in the view. That value of Brand Sub Family can be safely omitted, as no facts come in at the Brand Sub Family level and hence it is not needed for analysis (in most cases). This general rule does not apply to Scenario 3, which will be explained in the next post.

Meanwhile, as usual, let me know how far you agree or disagree with these points.

Jason has been working with Microsoft BI tools since he joined the IT industry in 2006. Since then he has worked across SSAS, SSRS and SSIS for a large number of clients. He is currently working for Mariner and is based out of Charlotte, NC. His personal blog can be found at http://road-blogs.blogspot.com
